Did you know that AMD's old Zen 3 architecture, released back in late 2020, is actually faster for gaming than Intel's latest Raptor Lake architecture, used in their 13th and 14th generation Core series processors? It's true, and AMD has graphs to prove it. Take a look at this...

The Ryzen 9 5950X, and now also the 5900XT, is worst-case as good as a Core i7-13700K, and best-case, it's about 4% faster. That's impressive, right?

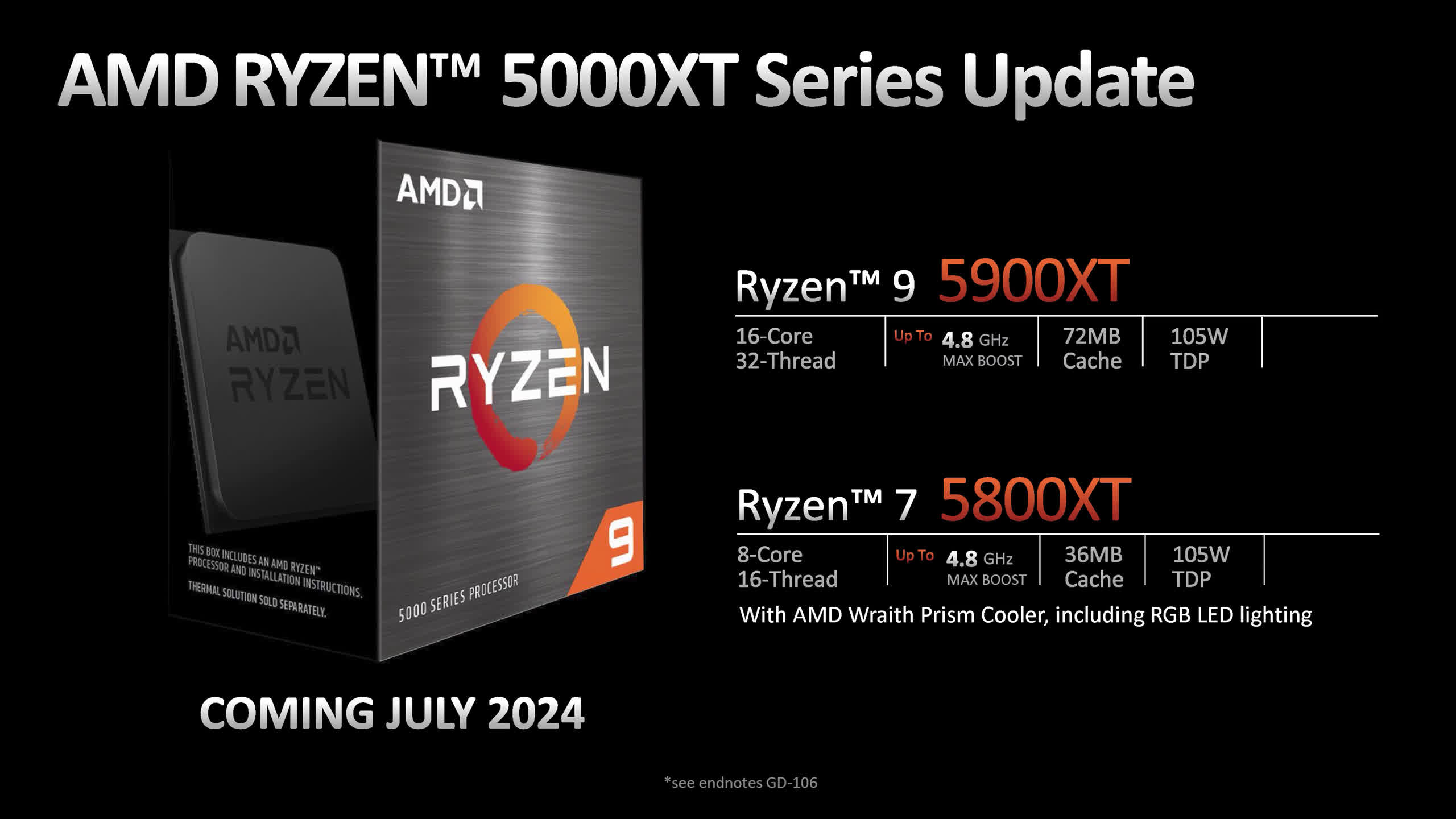

Indeed, it's awesome. The 13700K still costs at least $330, while the 5950X is $360. Therefore, we can probably assume that the upcoming 5900XT will be even cheaper. Initially, AMD said it was going to be $359, but then they quickly walked that back, probably after realizing that announcing pricing for upcoming processors while being overly enthusiastic was a bad idea. If only the Radeon division were that measured.

AMD also announced the 5800XT at $250 but later revised that as well. They must have been high as a kite when they came up with that price because there's no other explanation for charging 25% more than the 5800X for a mere 2% increase in clock speed. It's okay, though; we've all made mistakes, and we're going to allow AMD to come back later with more reasonable pricing.

If you watched the recent HUB Q&A series where we called out AMD for their misleading and anti-consumer marketing BS, you might be thinking, "Steve, stop flogging that dead horse; we get it, AMD was naughty." To that, we say, we're going to flog it some more, and it's going to be educational.

Now, if you weren't fortunate enough to have watched our Q&A series this month, let us quickly fill in the blanks. During the Computex trade show, AMD announced their upcoming Zen 5 processor series. In a press deck sent to the media, they also announced 'new' but not really new Zen 3 processors – essentially binned versions of silicon they were already selling, called the 5900XT and 5800XT.

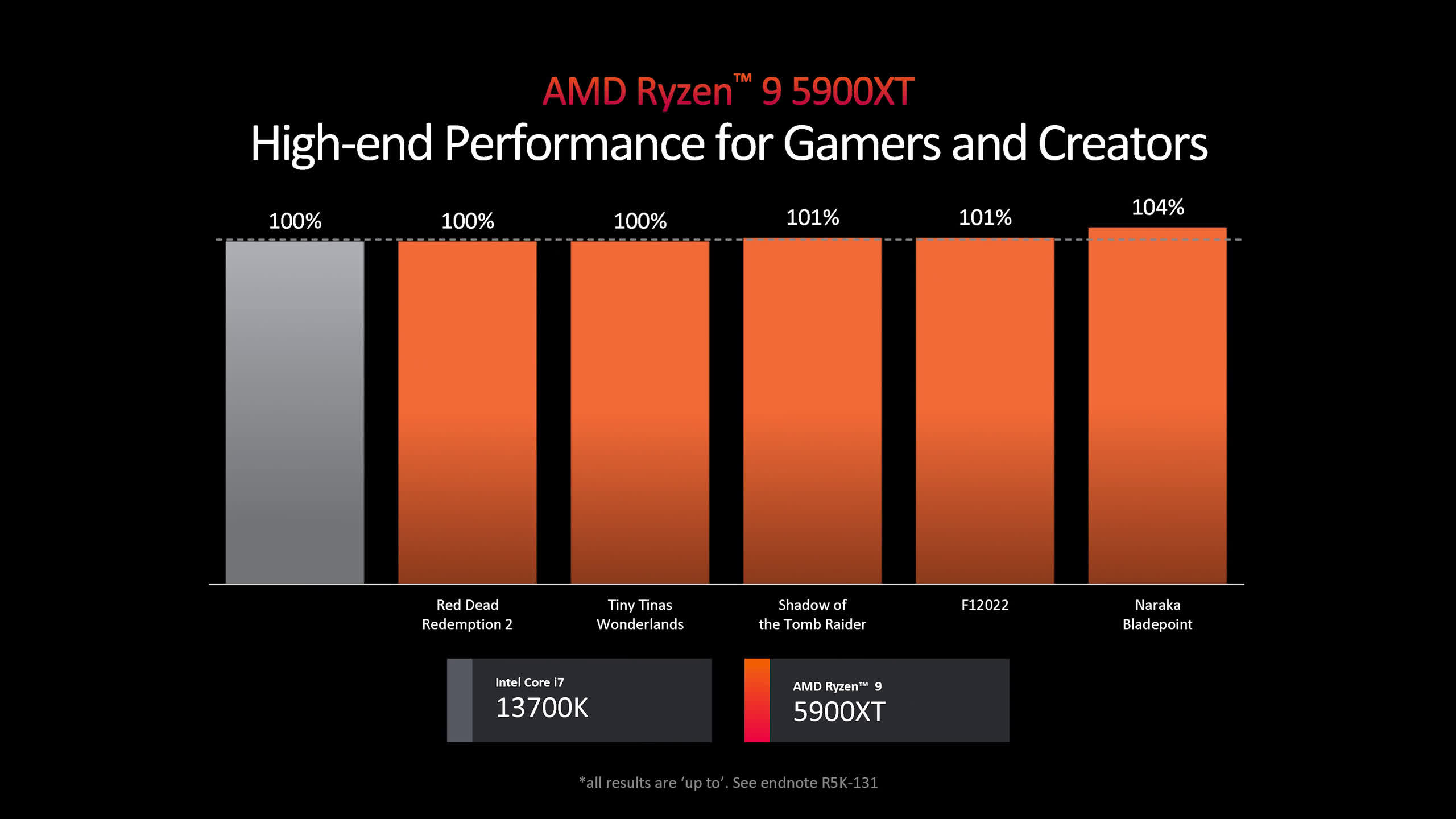

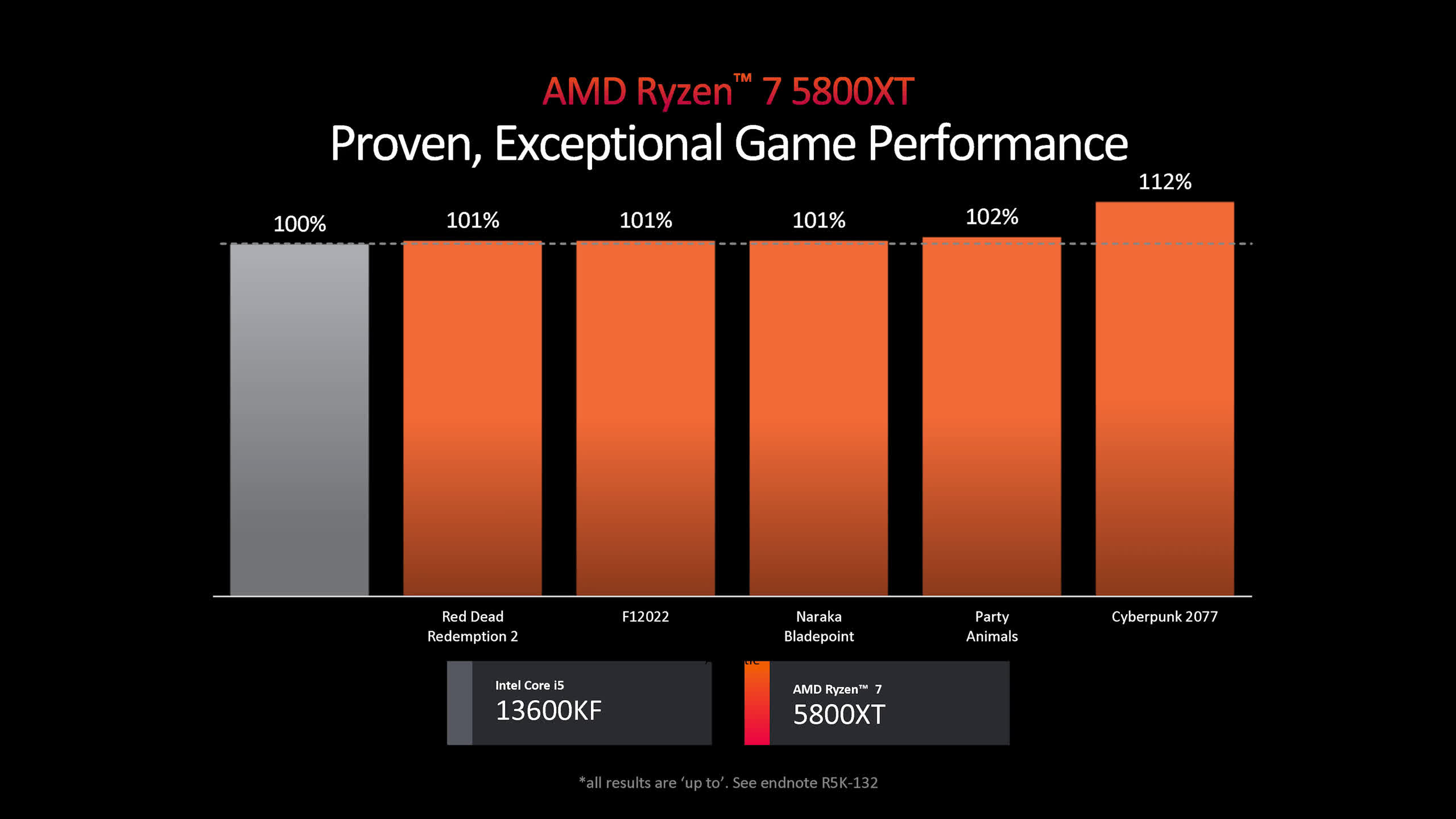

These CPUs on their own are fine – not exciting, but acceptable. The problem is that AMD included two performance slides for these CPUs, claiming these binned Zen 3 parts are as fast or slightly faster for gaming than Intel's Core i7-13700K and Core i5-13600KF. That's a whopper of a lie.

To achieve this deception, AMD heavily GPU-limited their CPU testing, pairing all CPUs with the Radeon RX 6600, an entry-level, previous-generation GPU that's now nearly three years old. This effectively levels the playing field, neutralizing any potential performance differences between the CPUs, resulting in nothing more than an RX 6600 benchmark.

Benchmarking CPUs with a heavy GPU bottleneck is a bad idea as it tells you nothing about how the CPU really performs in games. It's made even worse when basic information like the average frame rate is omitted. For all we know, the Radeon RX 6600 could have been rendering just 30 fps.
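To make the bottleneck argument concrete, here's a minimal sketch of the idea, assuming a deliberately simplified model in which the delivered frame rate is whatever the slower of the CPU and GPU can manage. The CPU labels and every frame-rate ceiling below are hypothetical placeholders, not measured results.

```python
def delivered_fps(cpu_cap: float, gpu_cap: float) -> float:
    """Simplified model: the slower of the two stages sets the frame rate."""
    return min(cpu_cap, gpu_cap)


# Hypothetical CPU-side frame-rate ceilings in fps (not measured data).
CPU_CAPS = {"older CPU": 110.0, "mid-range CPU": 160.0, "fast CPU": 220.0}


def run_suite(gpu_cap: float) -> dict[str, float]:
    """Frame rate each hypothetical CPU delivers behind a given GPU ceiling."""
    return {name: delivered_fps(cap, gpu_cap) for name, cap in CPU_CAPS.items()}


def spread_percent(results: dict[str, float]) -> float:
    """Gap between the fastest and slowest result, relative to the slowest."""
    slowest, fastest = min(results.values()), max(results.values())
    return (fastest / slowest - 1.0) * 100.0


slow_gpu = run_suite(gpu_cap=95.0)   # hypothetical entry-level GPU ceiling
fast_gpu = run_suite(gpu_cap=300.0)  # hypothetical high-end GPU ceiling

print("entry-level GPU:", slow_gpu, f"spread {spread_percent(slow_gpu):.0f}%")
print("high-end GPU:   ", fast_gpu, f"spread {spread_percent(fast_gpu):.0f}%")
```

In this toy model, every CPU posts the same number behind the slow GPU, so the chart says nothing about the CPUs. Real games are messier than a simple `min()`, but the effect is the same.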

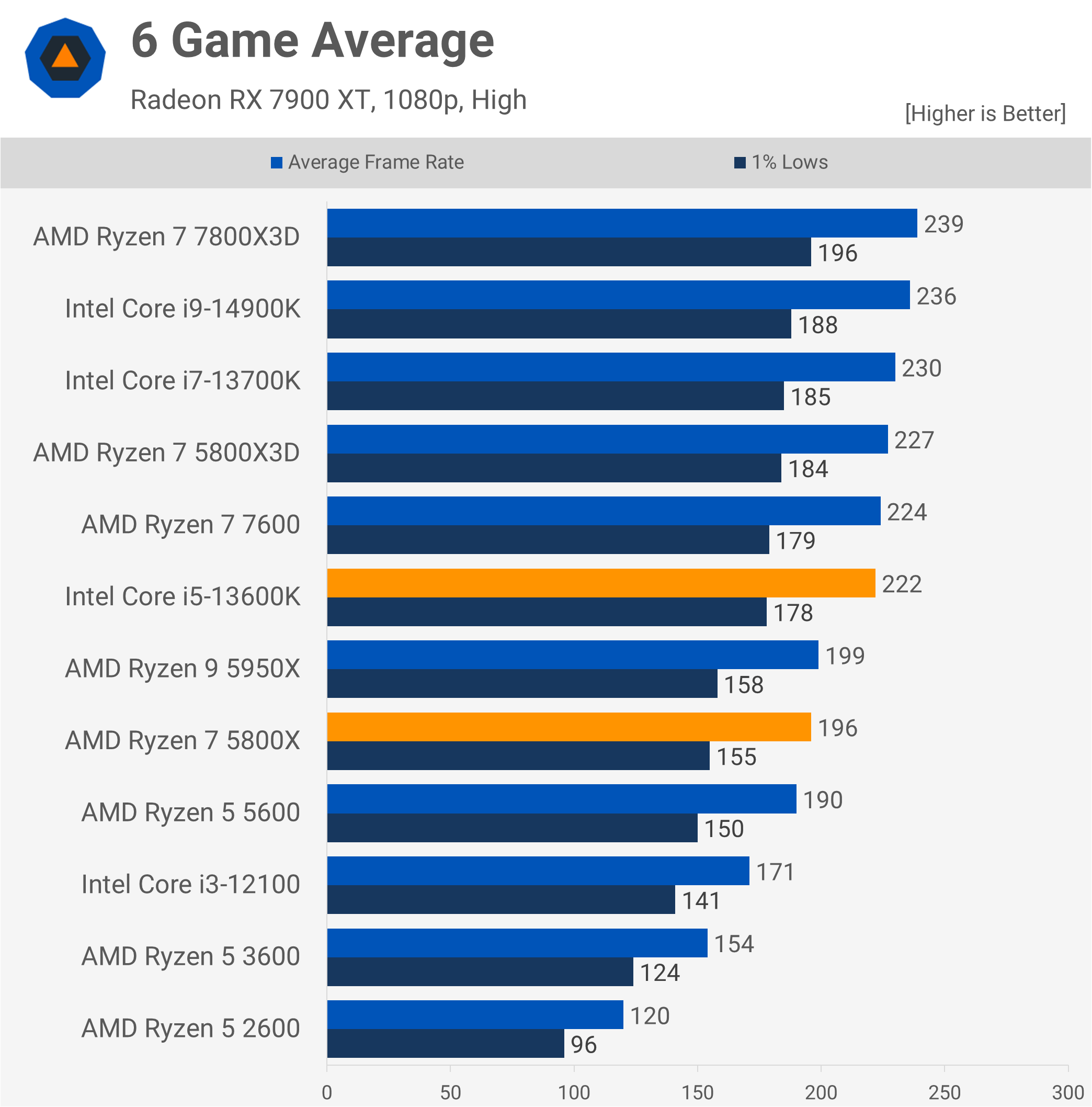

To investigate further, we've included some data with a more reasonable GPU, the Radeon RX 7900 XT. This might be a high-end product right now, but we have it on good authority that by early next year, this level of performance will be mid-tier.

For testing, we'll use the games AMD benchmarked with, at 1080p using the highest quality preset, but with upscaling and any ray tracing options disabled. AMD included an odd range of games, such as Party Animals, which we had to purchase on Steam specifically for this article. Not to worry, my 11-year-old daughter has been enjoying it.

We've also gone and tested a dozen very different CPUs as we believe this illustrates how silly testing CPUs with a strong GPU bottleneck is. So, let's get into it…

Benchmarks

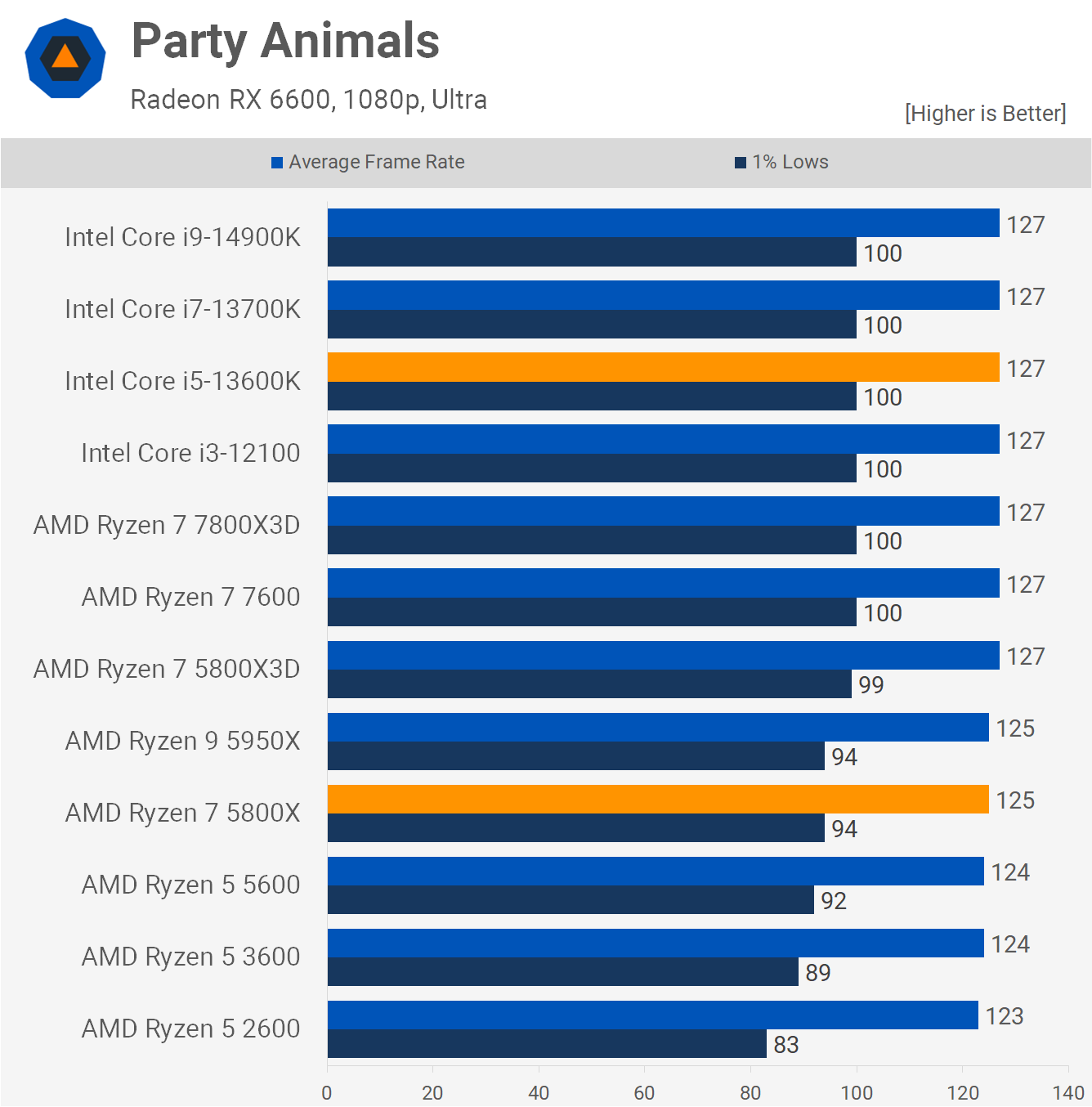

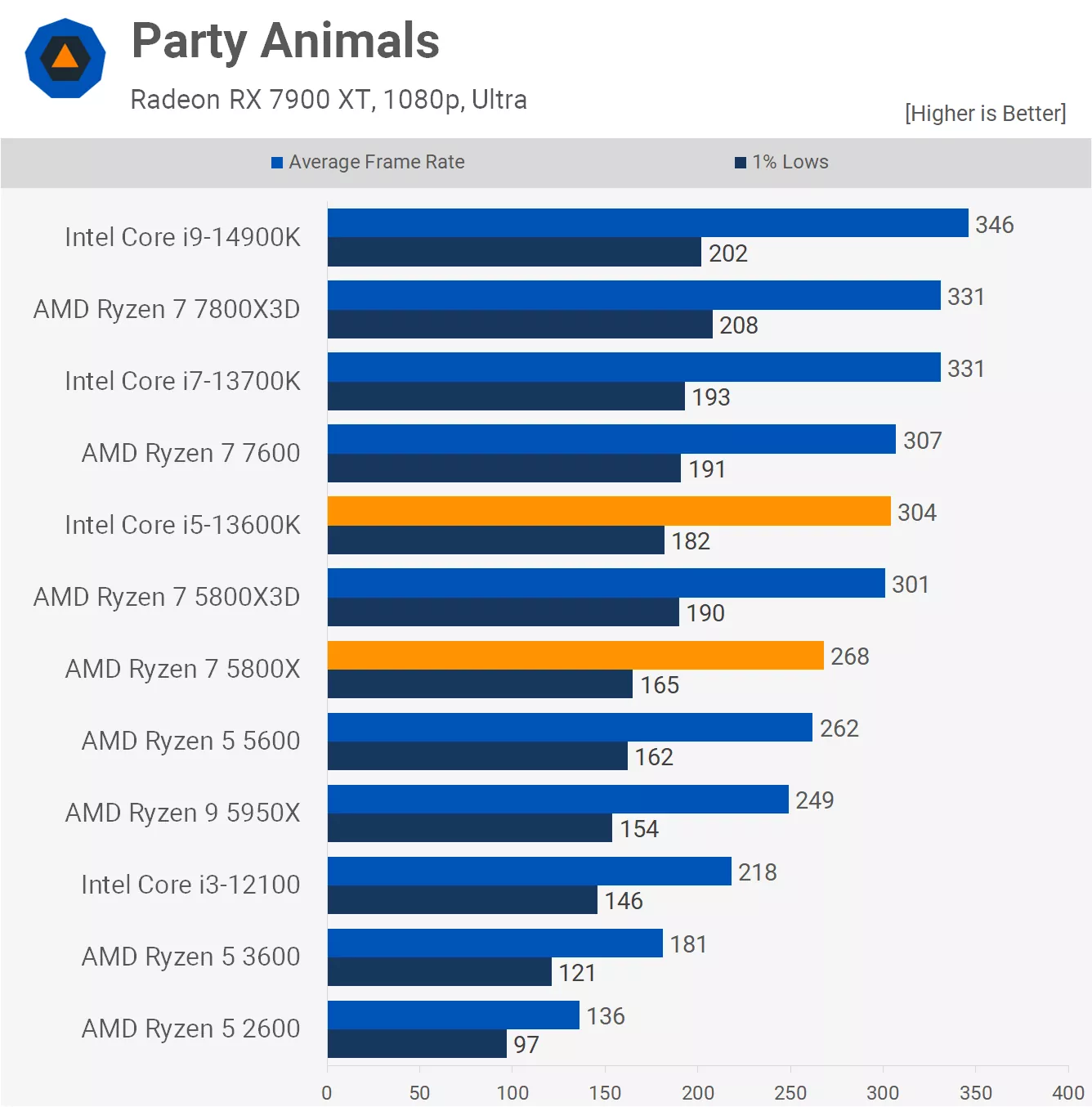

Let's start with everyone's favorite video game, Party Animals, using the Radeon RX 6600, which is surprisingly powerful enough to deliver high-refresh performance in this title. In that sense, AMD hasn't been too dodgy here, except for the fact that this is a terrible game for CPU benchmarking as it barely uses the CPU, and the RX 6600 is still a strong bottleneck for any relatively modern or high-end CPU.

Now, AMD didn't test the 5900XT with Party Animals, but they did with the 5800XT, and they claimed the 5800XT was 2% faster than the Core i5-13600K. However, in our testing, we found the Core i5 to be 2% faster. Maybe the 2% boost to core clocks will help the 5800XT here, but we don't suspect it will be enough to pull ahead. Also, if we look at the 1% lows, which AMD didn't test or at least didn't include data for, the Core i5 is 6% faster.
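For anyone unsure how an average frame rate, a 1% low, and a "percent faster" figure relate to one another, here's a rough sketch. It assumes one common definition of the 1% low (the average frame rate across the slowest 1% of frames); capture tools differ on the details, and this is not necessarily how our own charts are produced. The frame-time capture is made up for illustration.

```python
def average_fps(frame_times_ms: list[float]) -> float:
    """Average frame rate: frames rendered divided by total time."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


def one_percent_low_fps(frame_times_ms: list[float]) -> float:
    """Average frame rate over the slowest 1% of frames (one common definition)."""
    worst_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(worst_first) // 100)
    return average_fps(worst_first[:count])


def percent_faster(a_fps: float, b_fps: float) -> float:
    """How much faster A is than B, in percent."""
    return (a_fps / b_fps - 1.0) * 100.0


# Hypothetical capture: 990 smooth frames at 8 ms plus 10 stutters at 20 ms.
capture = [8.0] * 990 + [20.0] * 10

print(f"average: {average_fps(capture):.1f} fps")
print(f"1% low:  {one_percent_low_fps(capture):.1f} fps")
```

The handful of slow frames barely dents the average but defines the 1% low, which is why two CPUs can be close on average frame rate and further apart on 1% lows.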

But what if we re-test with the Radeon RX 7900 XT? Well, things change quite a bit. Now, the 13600K is 13% faster than the 5800X when comparing the average frame rate.

This is still a poor title for testing the gaming performance of CPUs because it isn't very CPU demanding, but at least this more CPU-limited data provides us with a bit more insight into how these CPUs really perform.

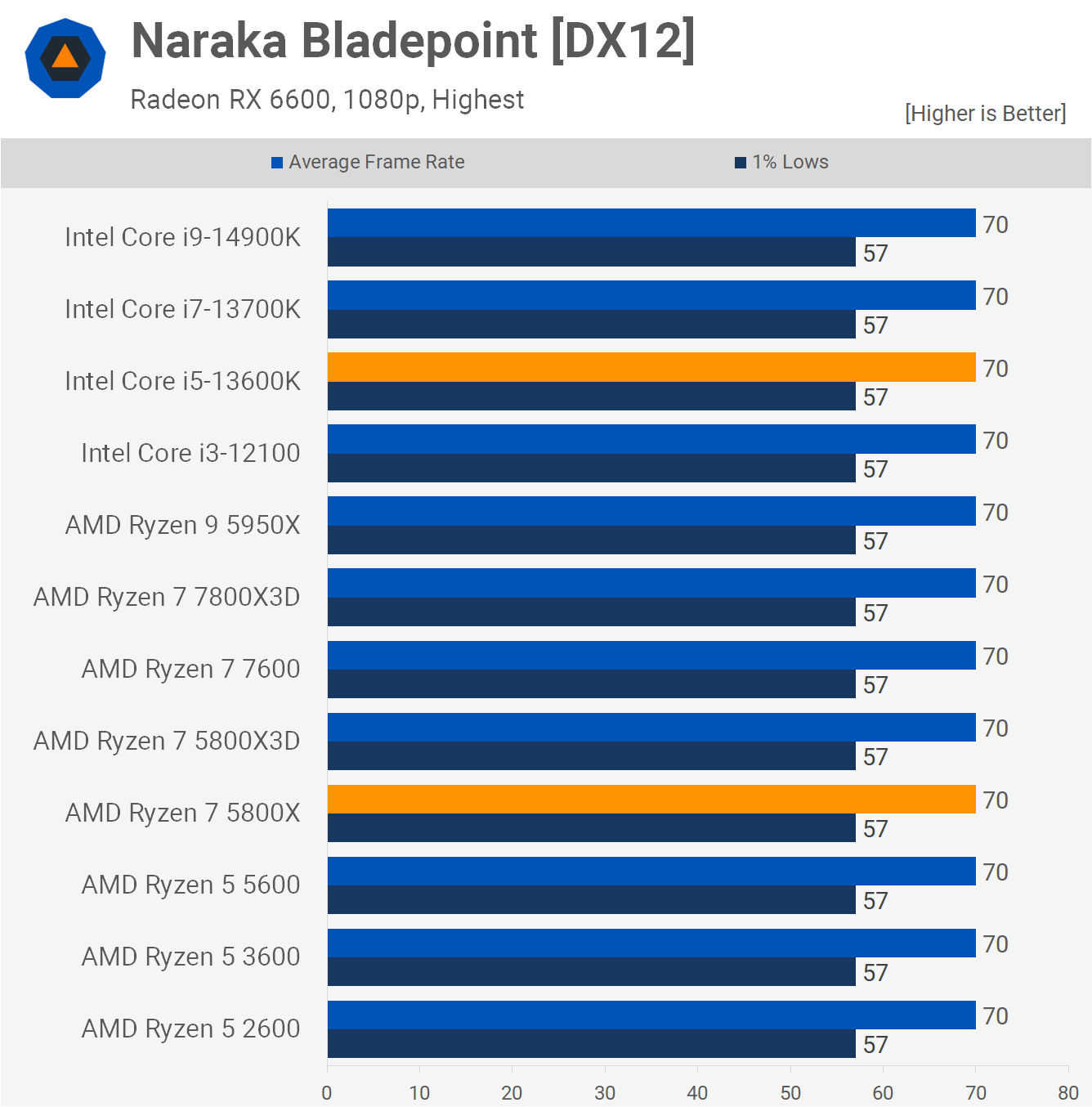

Naraka Bladepoint is another game we haven't used for testing before, but this one seems a bit more legitimate as a CPU benchmark – assuming you're not using a Radeon RX 6600. Seriously, though, this one is super dodgy by AMD. Using an RX 6600, you're as GPU bound as you possibly could be; even the Ryzen 5 2600 is able to match the 7800X3D and 14900K. So, this data is completely useless, and it blows our minds how often we see requests for this sort of CPU testing.

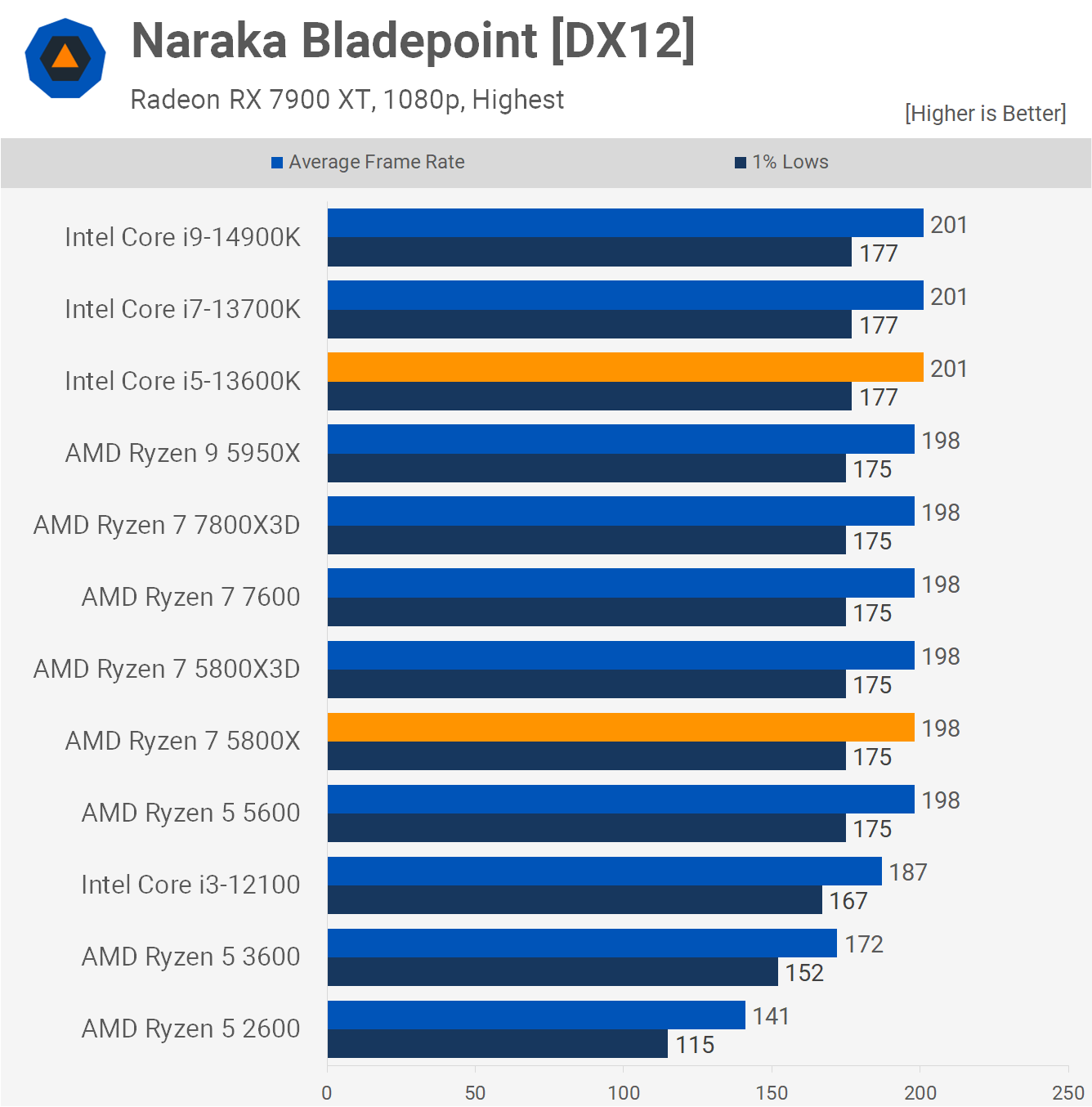

Even with the 7900 XT installed, the game is still, for the most part, GPU limited, so Naraka Bladepoint doesn't appear to be a great CPU benchmark. Oddly, AMD used this game to claim that the 5900XT is 4% faster than the 13700K, yet with the RX 6600, there's simply no chance that's true. With a faster GPU like the 7900 XT, the Intel processor is actually a few percent faster. But yeah, not a great CPU benchmark, this one.

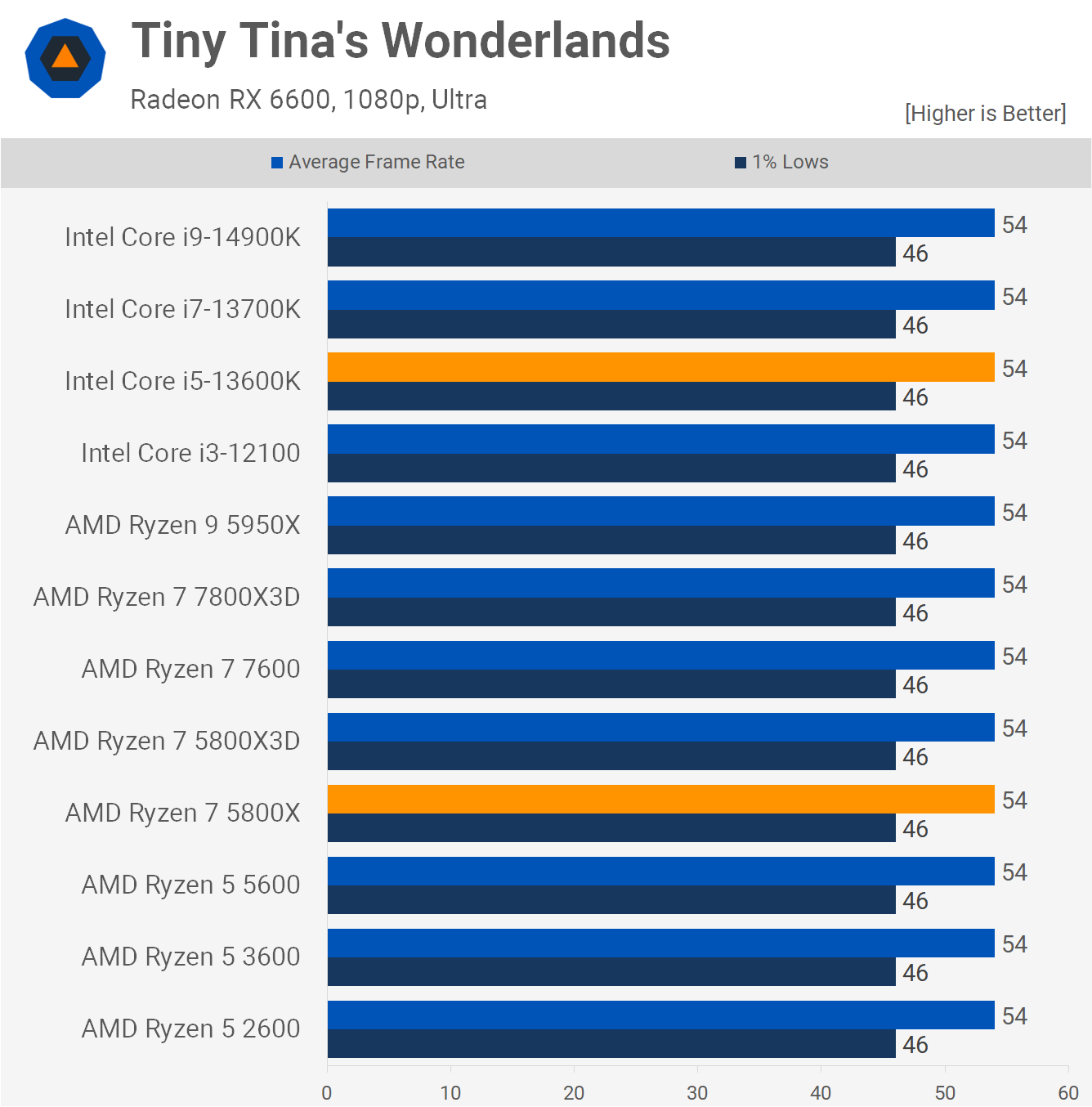

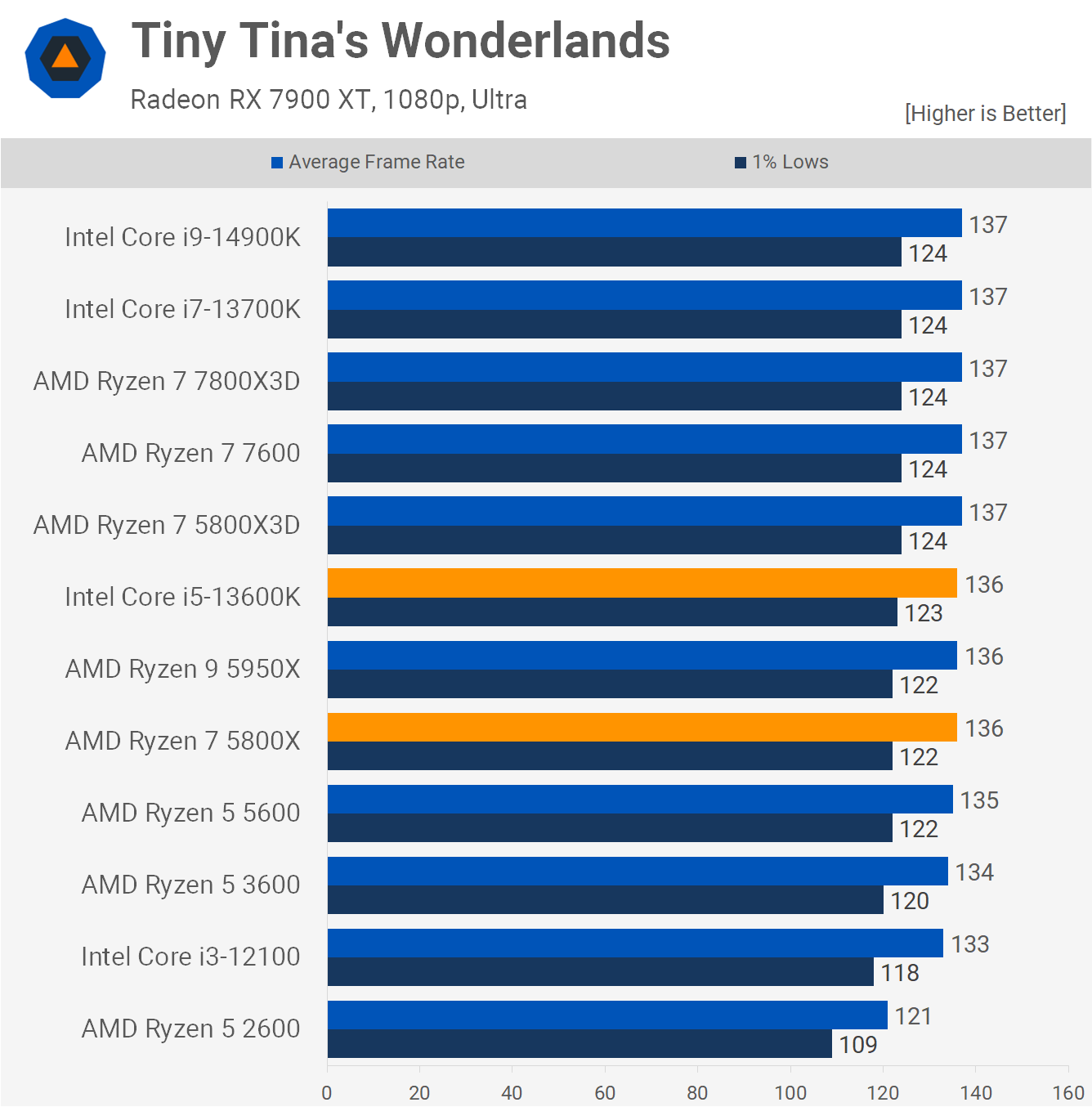

Tiny Tina's Wonderlands is a game we have used in the past for CPU benchmarking but quickly dropped after it became apparent that it wasn't demanding enough for testing modern processors, especially when using an RX 6600. AMD did claim no performance difference between the upcoming 5900XT and 13700K in this title, and we see that's certainly true – again when using a previous-generation entry-level GPU.

Even with the 7900 XT installed, we see that Tiny Tina's Wonderlands just isn't that CPU demanding, with no real performance drop-off until we drop down to the super old Ryzen 5 2600. So again, AMD used another game that doesn't really stress the CPU and then ensured that it was GPU limited anyway by using the RX 6600.

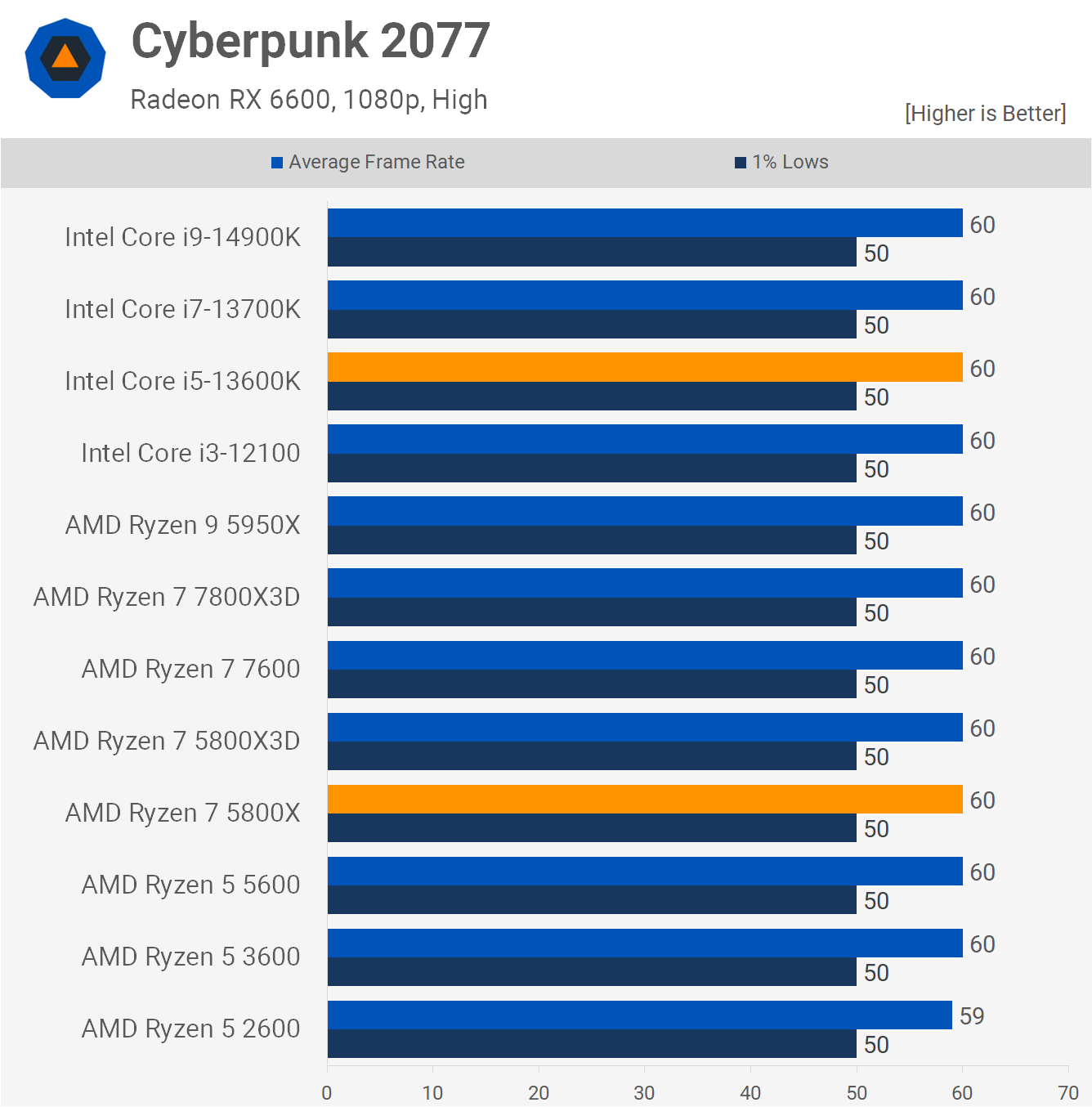

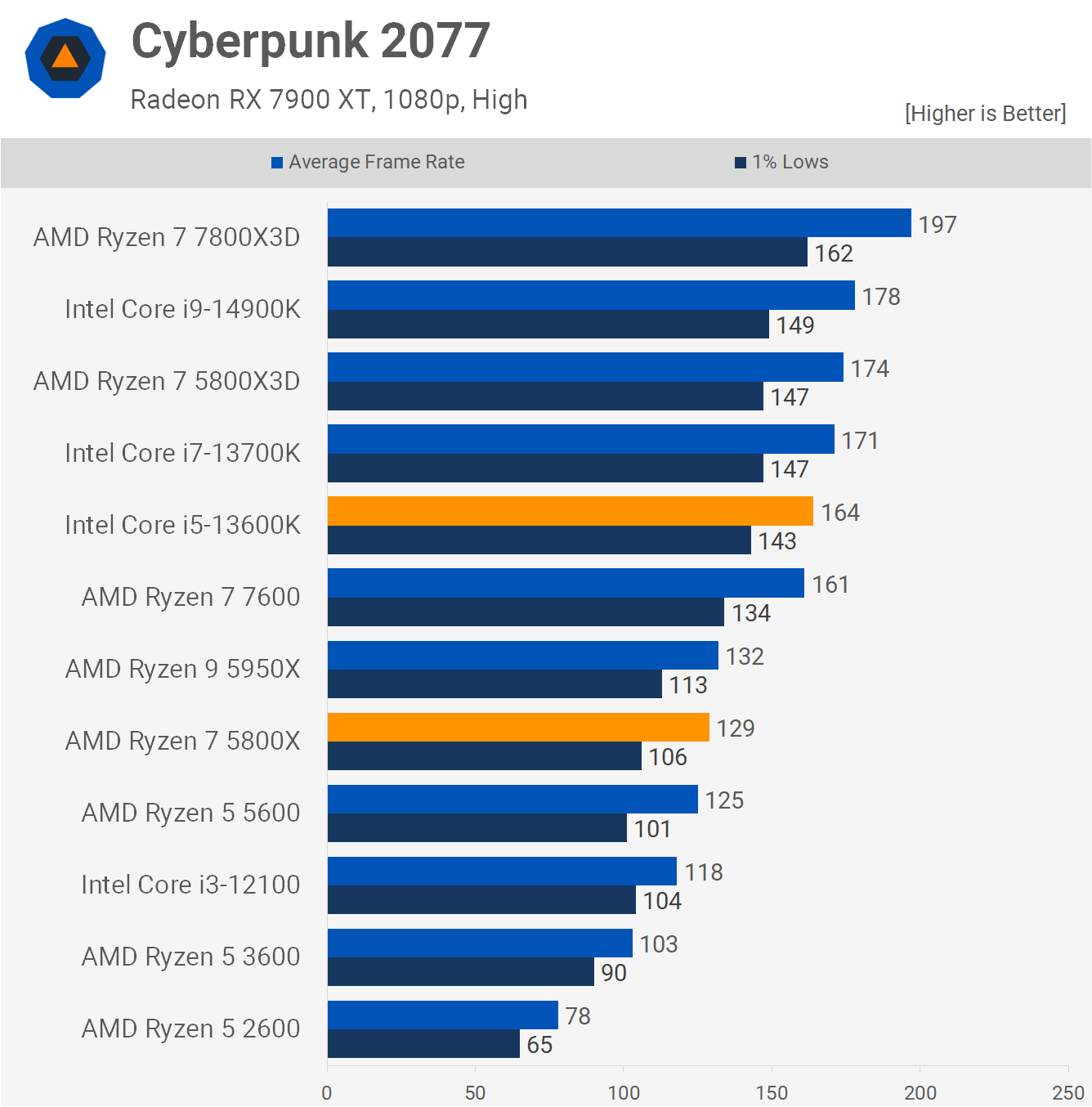

Cyberpunk 2077 is really CPU demanding, or at least it can be when going above 60 fps. We're not even using the 'ultra' preset here as that hammered the frame rate down into the 40s. So, we drop down to the 'high' preset with the RX 6600, and even then, we're left with totally useless data. Oddly, AMD claimed under these conditions that the 5800XT is 12% faster than the 13600K. What the actual f-bomb, AMD? How could that be remotely true?

Making AMD's 12% claim even more insane are the 7900 XT results. Here, the 13600K is 27% faster than the 5800X and 35% faster when looking at the 1% lows. Granted, we are testing the Intel CPUs with high-speed DDR5 memory, but even with the slowest DDR4 memory you can find, the 13600K should still beat the 5800X. In fact, with the 7900 XT, memory speed shouldn't be that crucial.

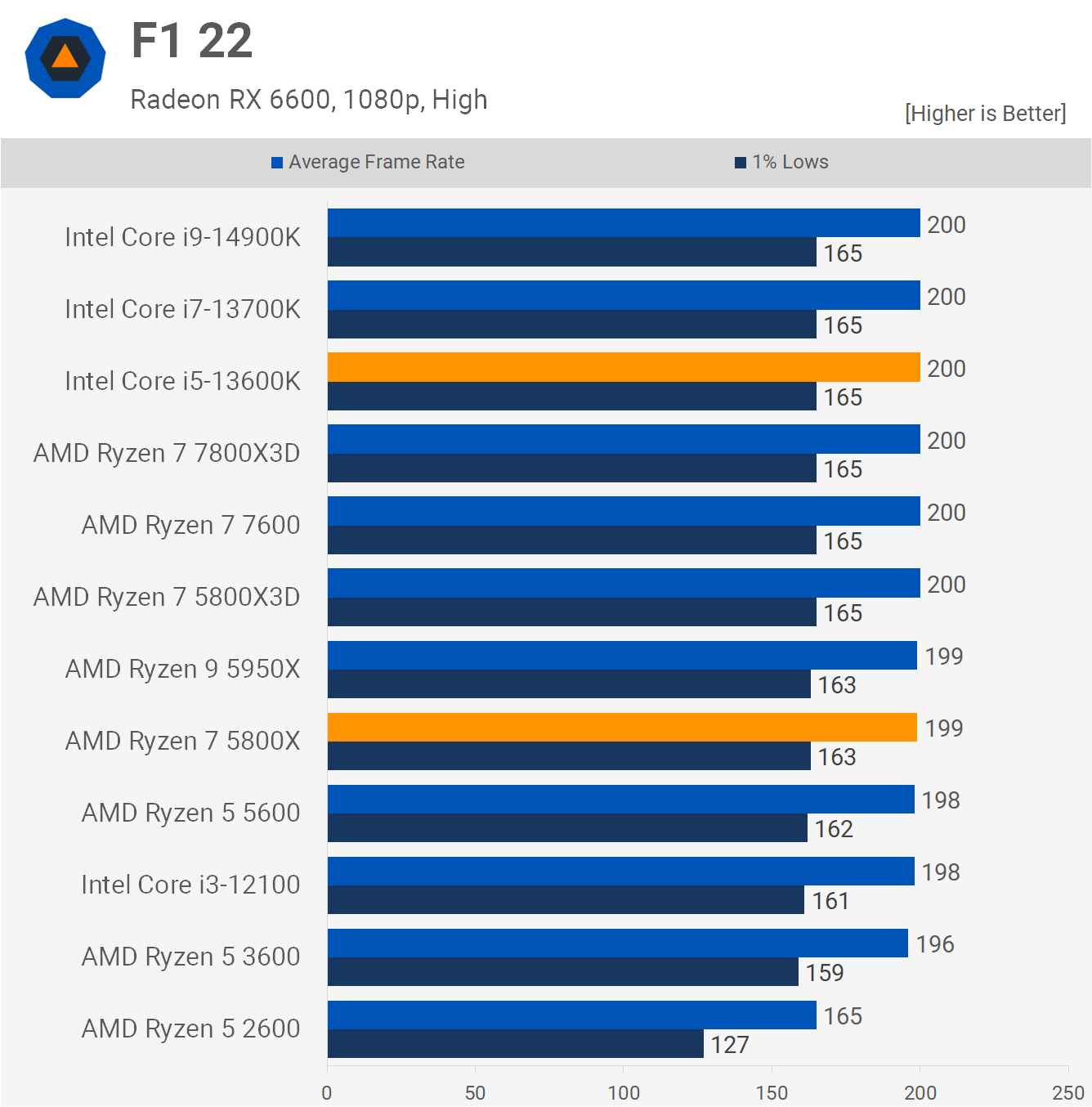

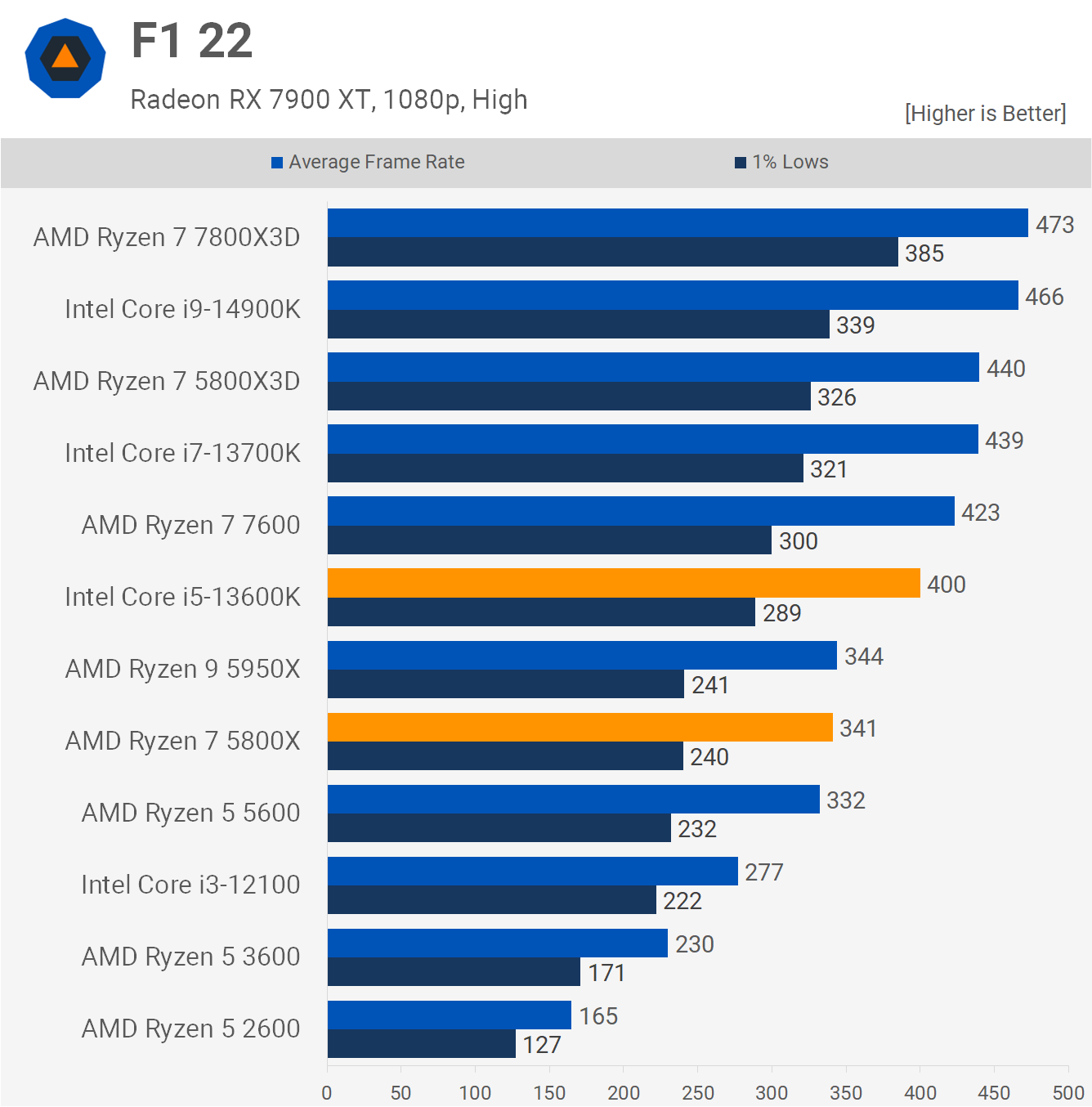

For testing F1 22, we dropped down to the 'high' preset, which disables ray tracing and allows older GPUs like the RX 6600 to render high frame rates at 1080p. Only the Ryzen 5 2600 drops off here; the rest of the pack is heavily GPU limited, so we don't really learn anything about CPU performance. AMD claimed a 1% advantage in both matchups here, but we're not seeing it.

Retesting with the 7900 XT changes things quite a bit. The 13700K is now 28% faster than the 5950X, while the 13600K is 17% faster than the 5800X. So it's a bit odd that AMD would claim to have a small performance advantage in this title when that's not at all the case.

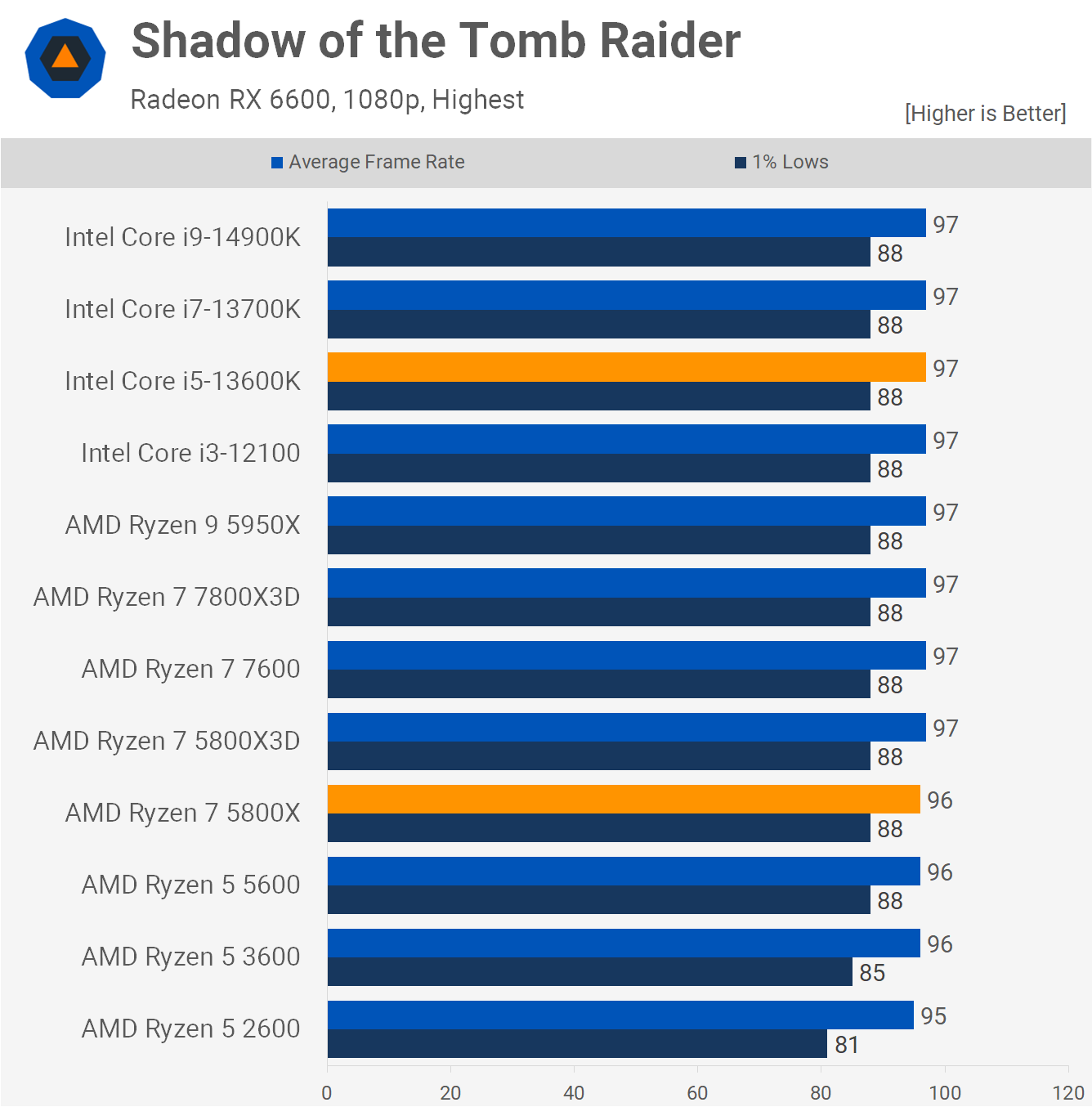

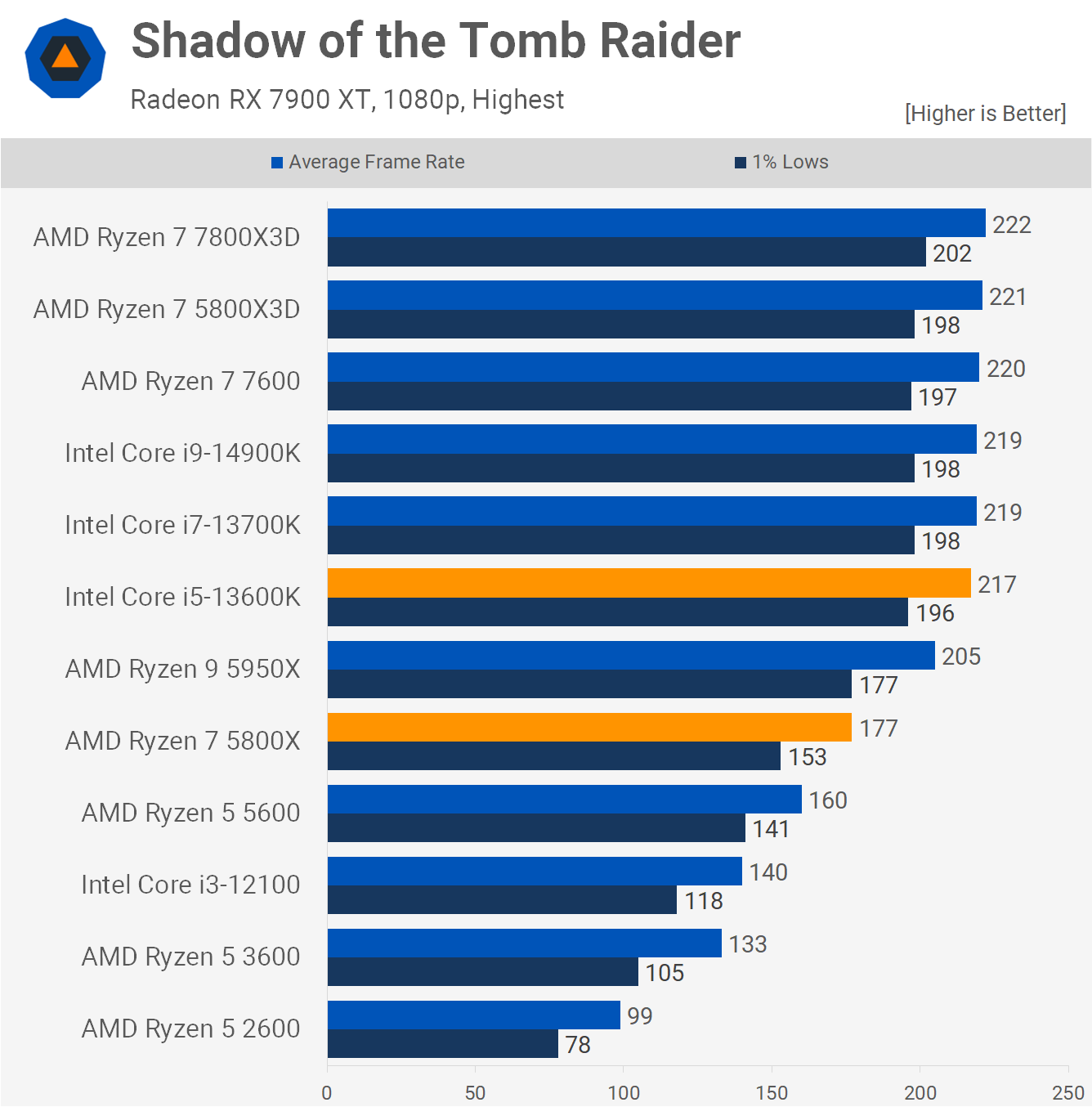

Finally, we have Shadow of the Tomb Raider, and we assume AMD was using the built-in benchmark for this one, which is a terrible CPU test – it's really a GPU benchmark. So we're testing in-game, in the village section, which is substantially more CPU demanding, though you wouldn't really know it with a Radeon RX 6600 as all high-end CPUs were limited to the same 96-97 fps. Again, AMD claimed that the upcoming 5900XT, which is really a 5950X, is 1% faster than the 13700K in this title. So let's take a closer look at that.

With the 7900 XT installed, the margin isn't huge, but the 13700K is 7% faster than the 5950X, or 12% if you compare 1% lows. The 5950X actually does very well relative to the 5800X, but the results are miles off what AMD claimed.

Average Performance

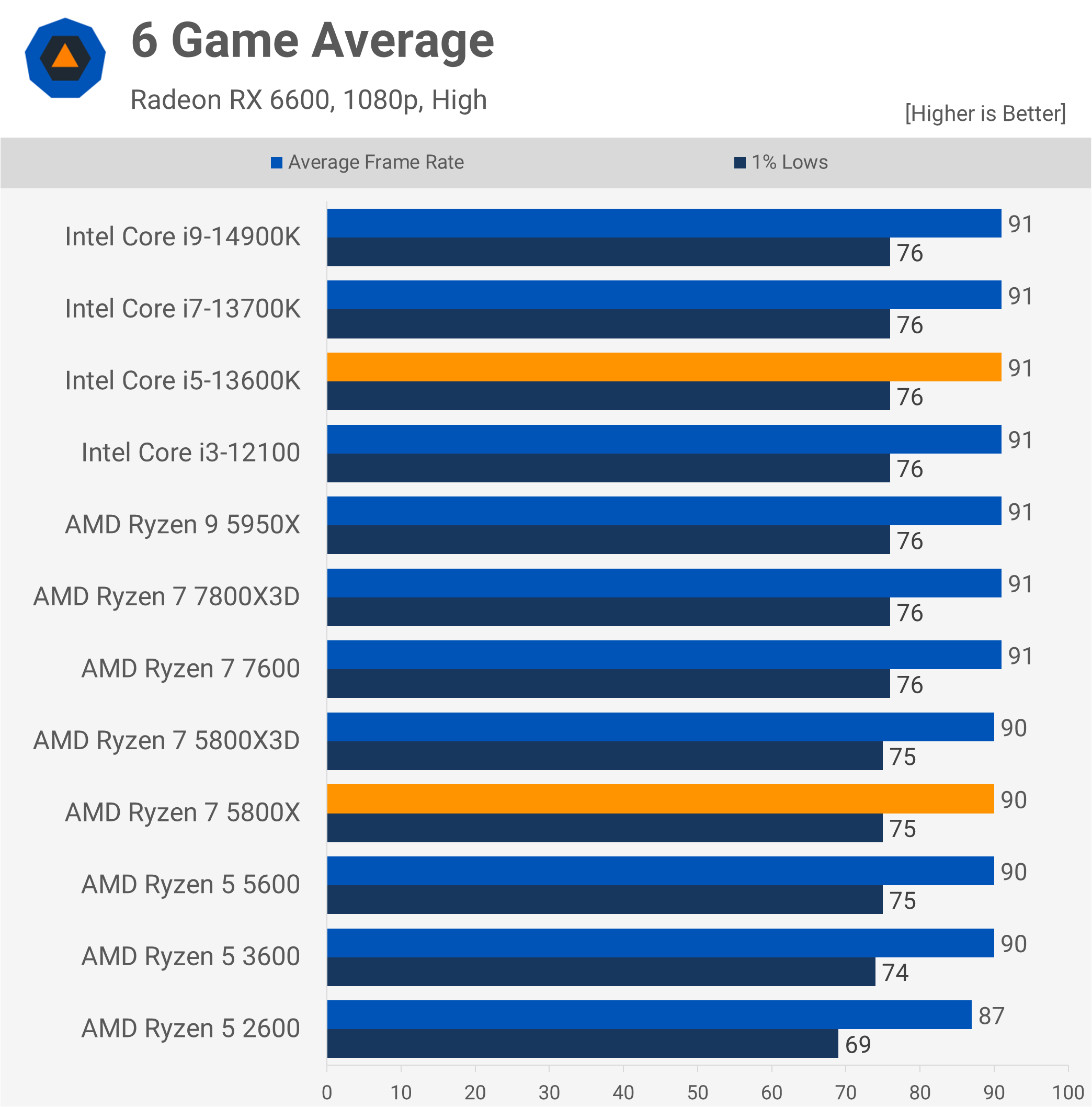

In conclusion, one could say that the 5800X and 13600K are identical for gaming if the goal is to grossly misrepresent gaming performance. Using an RX 6600 and an odd batch of six games, Intel wins by a percent, rather than AMD winning by 2% as they claimed.

When these CPUs are benchmarked using the same odd batch of six games but with the 7900 XT, the Intel CPU is 13% faster on average. We've shown using more demanding games that the average is more like 28% in Intel's favor, but even the 13% seen here is very different from what AMD claimed.

For the 5950X vs. 13700K, using the RX 6600 and an odd batch of six games, they delivered the same performance.

With the 7900 XT installed, the 13700K was, on average, 16% faster. This isn't quite the 36% we see with the RTX 4090 in more demanding games, but again, it is very different from what AMD showed us.
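As a rough illustration of how a multi-game average margin can be worked out, here's a sketch that takes the geometric mean of per-game frame-rate ratios. That's a common way to average relative performance, and we're assuming it purely for this example; it isn't a statement of how the chart above was calculated. All frame rates below are invented placeholders, not the results from our testing.

```python
import math


def geomean(values: list[float]) -> float:
    """Geometric mean, a common way to average performance ratios."""
    return math.exp(sum(math.log(v) for v in values) / len(values))


def average_margin_percent(results: dict[str, tuple[float, float]]) -> float:
    """Average lead of CPU A over CPU B across games, in percent."""
    ratios = [fps_a / fps_b for fps_a, fps_b in results.values()]
    return (geomean(ratios) - 1.0) * 100.0


# Placeholder average frame rates per game as (cpu_a_fps, cpu_b_fps).
# Invented for illustration; these are NOT the numbers from our charts.
gpu_limited = {"game 1": (96.0, 96.0), "game 2": (61.0, 61.0), "game 3": (140.0, 140.0)}
cpu_limited = {"game 1": (150.0, 120.0), "game 2": (110.0, 100.0), "game 3": (242.0, 220.0)}

print(f"GPU-limited suite: {average_margin_percent(gpu_limited):.1f}% lead for CPU A")
print(f"CPU-limited suite: {average_margin_percent(cpu_limited):.1f}% lead for CPU A")
```

When every game in the suite is GPU limited, each ratio is 1.0 and the average margin collapses to zero, no matter how different the CPUs really are.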

What We Learned

So there you have it, AMD's bad benchmarks are indeed BAD, and frankly unnecessary. AMD should have just announced the 5900XT and 5800XT and left it at that. There's no need to show gaming performance for Zen 3 processors that we've had for three years now. Everyone knows what they are, and without a hefty price cut, they're not worth buying for gaming. The 5900XT might make sense for productivity, assuming it's much cheaper than the 5950X and you're already on the AM4 platform, but for gaming, surely the 5700X3D for $200 makes much more sense than the 5800XT.

As for benchmarking CPUs with low-end GPUs, we hope we're starting to make some headway here with readers who believe testing with an RTX 4090 at 1080p is misleading, inaccurate, or whatever else they come up with. The idea is to see how many frames each part can output, allowing you to compare their performance and determine which one offers the best value at a given price point.

The idea of testing with a "more realistic" GPU might make sense on the surface, but it's a deeply flawed approach that tells you nothing useful and, if anything, only serves to mislead. Pretending that the Ryzen 7 5800X is just as fast as the Core i7-13700K for gaming might make you feel good about the Ryzen processor, but outside of GPU-limited gaming, it's simply not true.

We also found that the Ryzen 7 7800X3D was no faster than the Core i3-12100 when using the Radeon RX 6600, but we're pretty sure you'll find that the Ryzen 7 processor is indeed much faster for gaming, and it won't take you long to discover this. Anyway, this is not the first time we've touched on this subject, so for those yet to be convinced, we doubt we got you this time.

As for AMD, this was an embarrassing and unnecessary marketing blunder, and we're most annoyed by the fact that we now have to benchmark these CPUs when they're released. Ideally, we'd just like to ignore them and call them what they are: the 5900XT is a 5950X, and the 5800XT is a 5800X. But now we'll have to provide benchmarks to prove the obvious. Thanks, AMD. Anyway, we hope you enjoyed those RX 6600 benchmarks – we know we did!