Futurology articles

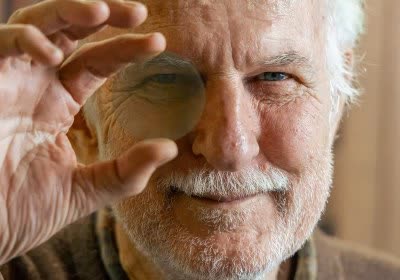

Infrared filter allows everyday eyeglasses to double as night vision lenses

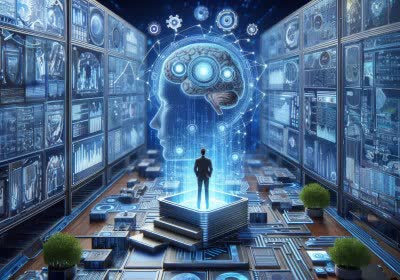

OpenAI launches faster and free GPT-4o model – new voice assistant speaks so naturally you will think it's hoaxed

Forward-looking: OpenAI just introduced GPT-4o (GPT-4 Omni or "O" for short). The model is no "smarter" than GPT-4, but some remarkable innovations still set it apart: the ability to process text, visual, and audio data simultaneously, almost no latency between asking and answering, and an unbelievably human-sounding voice.

Survey reveals almost half of all managers aim to replace workers with AI, could use it to lower wages

A hot potato: A lot of companies try to assuage fears that employees will lose their jobs to AI by assuring them they'll be working alongside the tech, thereby improving efficiency and making their duties less tedious. That claim feels less convincing in light of a new survey that found 41% of managers said they are hoping to replace workers with cheaper AI tools in 2024.

Ventiva shows off tech to keep chips and devices cool

In context: We've noticed a burning sensation in our pockets lately. Not the bite of inflation, but an actual heat source coming from our phones. Maybe it is 5G, or maybe it is a design decision made by Apple or TSMC. Whatever the reason, our smartphones run hot.

Eco-friendly "water batteries" are cheaper and safer than lithium-ions

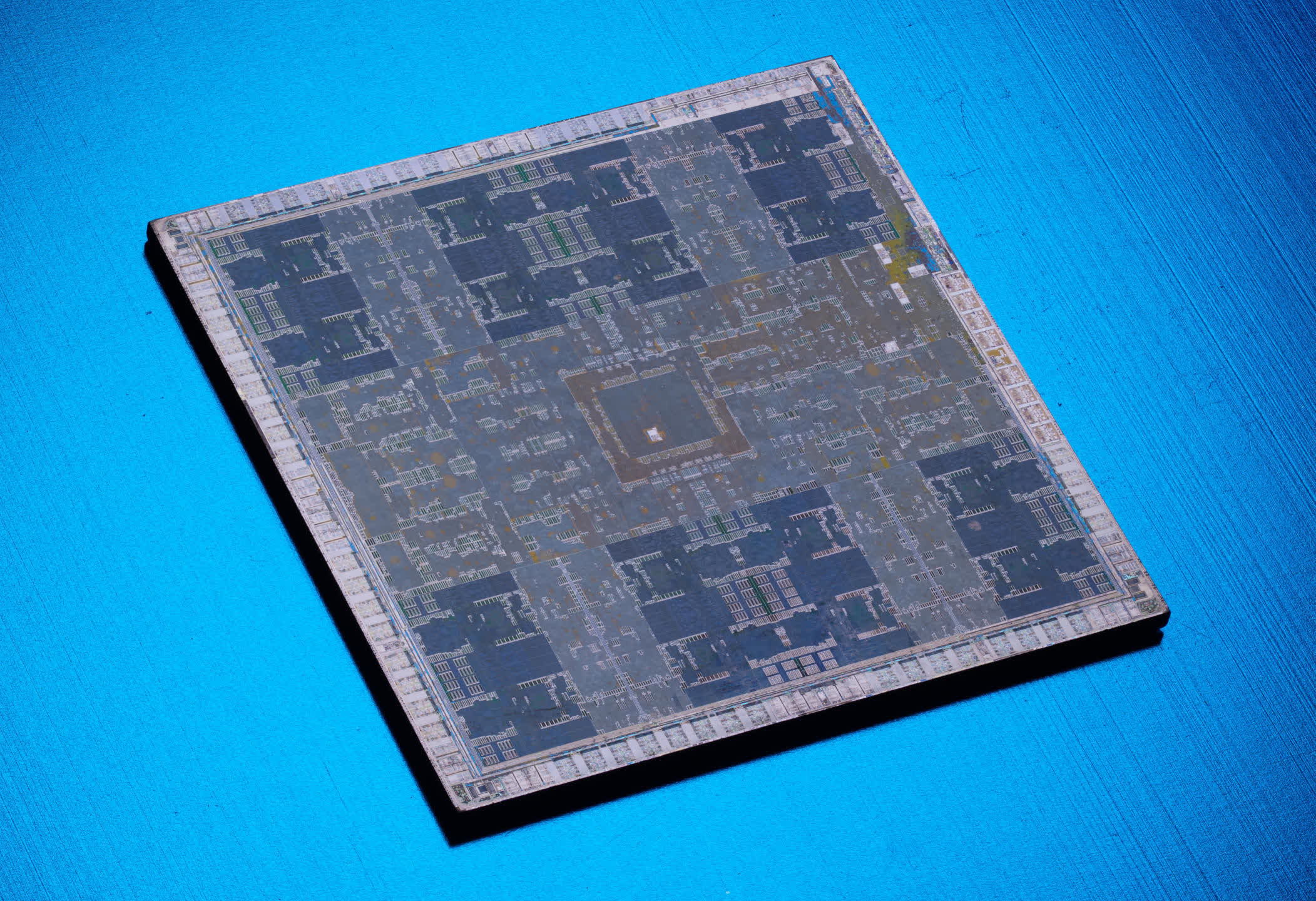

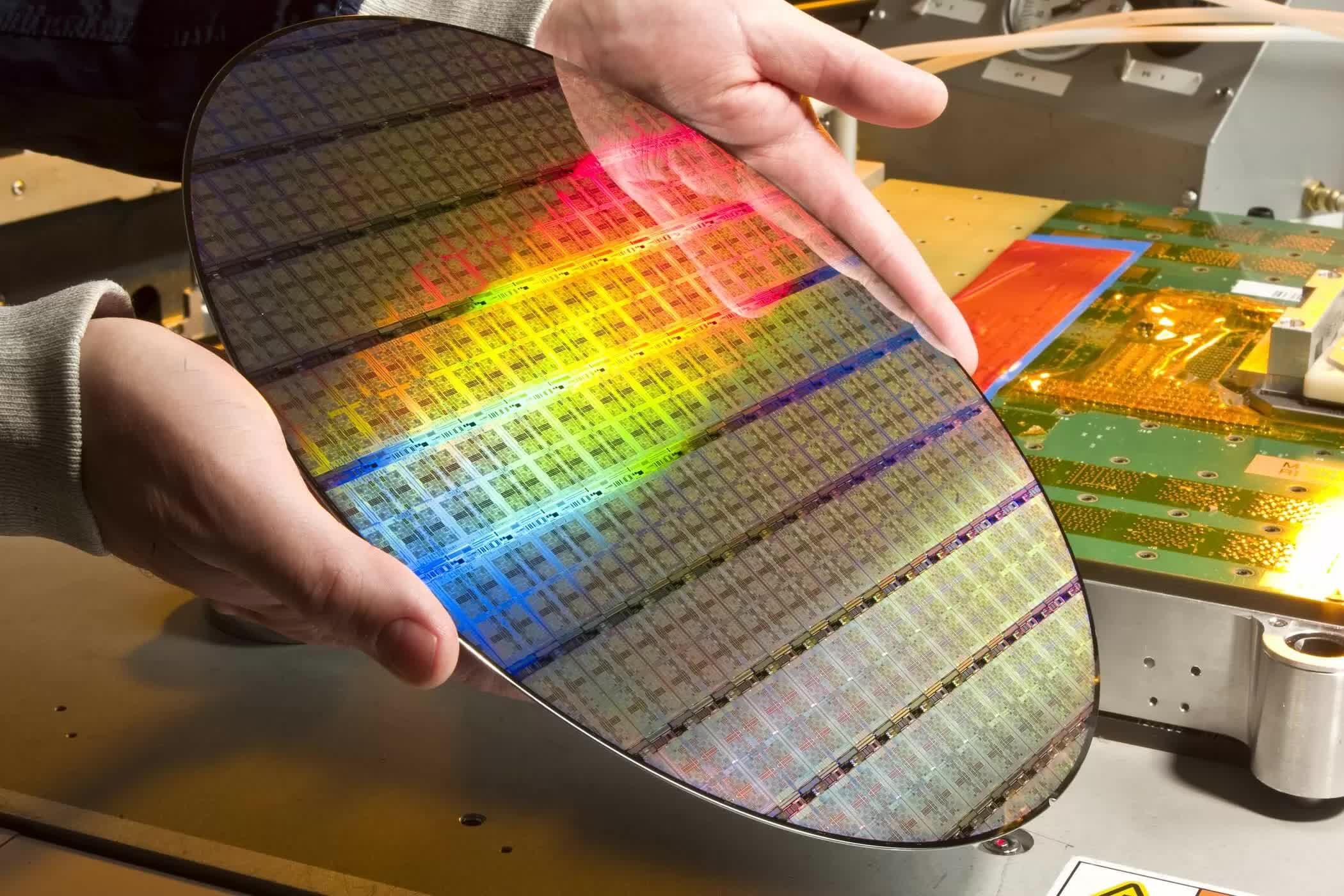

Goodbye to Graphics: How GPUs Came to Dominate Compute and AI

Gone are the days when the sole function of a graphics chip was graphics. Let's explore how the GPU evolved from a modest pixel pusher into a blazing powerhouse of floating-point computation.

Data Governance and Provenance: Two words that are critical to the future of generative AI

DVD-like optical disc could store 1.6 petabits (or 200 terabytes) on 100 layers

Wrestling with AI and the AIpocalypse we should be worried about

Editor's take: Like almost everyone in tech today, we have spent the past year trying to wrap our heads around "AI": what it is, how it works, and what it means for the industry. We are not sure that we have any good answers, but a few things have become clear. Maybe AGI (artificial general intelligence) will emerge, or we'll see some other major AI breakthrough, but focusing too much on those risks could mean overlooking the very real – but also very mundane – improvements that transformer networks are already delivering.

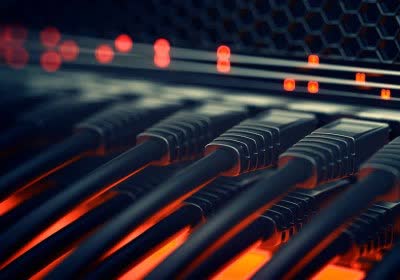

Custom data center reaches 64 gigatransfers per second through optical PCIe 6.0

HaLow Wi-Fi standard achieves 1.8-mile range in field test

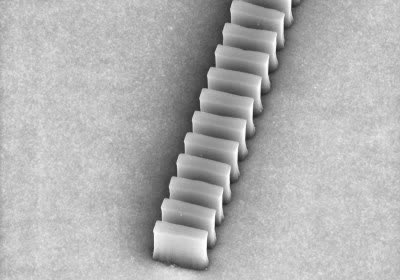

Researchers develop world's first functioning graphene semiconductor

LG shows off 77-inch transparent OLED TV at CES

A new internet standard called L4S aims to lower latency, bring more speed to the web

Researchers achieve data storage breakthrough using diamond defects

Who famously said: "There is no reason anyone would want a computer in their home"?

Opinion: The rapidly evolving state of Generative AI

SoftBank CEO says artificial general intelligence (AGI) will be here in 10 years

Testing Nvidia DLSS 3.5 Ray Reconstruction Using Cyberpunk 2.0

The next iteration of Nvidia's DLSS technology has landed and today we're checking out what DLSS 3.5 is and taking a closer look at ray reconstruction, which has been integrated into Cyberpunk 2.0.

New silicon-based "metasurfaces" can rapidly detect toxins, viruses, cancer, and other diseases

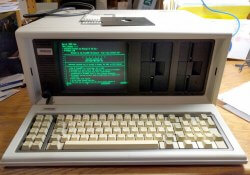

Then and Now: How 30 Years of Progress Have Changed PCs

Over the last 30 years, the PC has utterly transformed in appearance, capability, and usage, going from hulking beige boxes to an astonishing array of powerful, colorful computers.

I got to play with the Apple Vision Pro and saw the future of computing. Again.

Editor's take: I was one of the lucky few who got to attend Apple's WWDC keynote presentation in person, and also got to try the new Apple Vision Pro headset for a 25-minute hands-on, er, heads-on demo. The experience was very good – as it certainly should be for a product that's going to cost a whopping $3,499 – but it was also a bit more similar to other devices I've tried over the years than I initially expected it to be.

What Are Chiplets and Why They Are So Important for the Future of Processors

While chiplets have been in use for decades, today they are the hottest trend in processor tech, with millions of people worldwide using them in desktop PCs, workstations, and servers.