Why it matters: Companies involved in PCIe development have been designing optical connectors for the protocol for a while, but DevCon 2024 saw a significant new step toward using them in real-world hardware. Transitioning from CopperLink to optical might become crucial for the massive speed increases expected from PCIe 6.0 and 7.0.

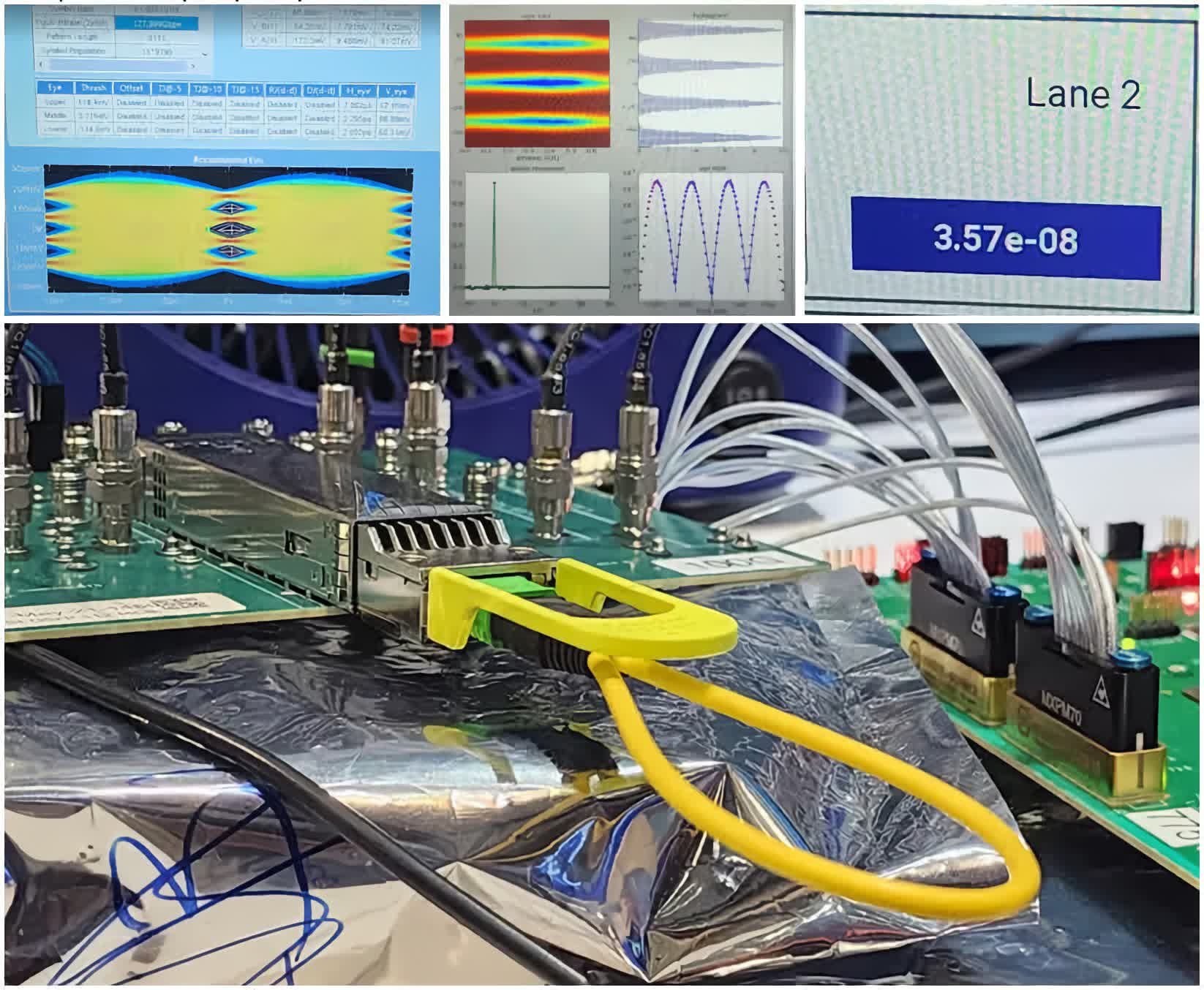

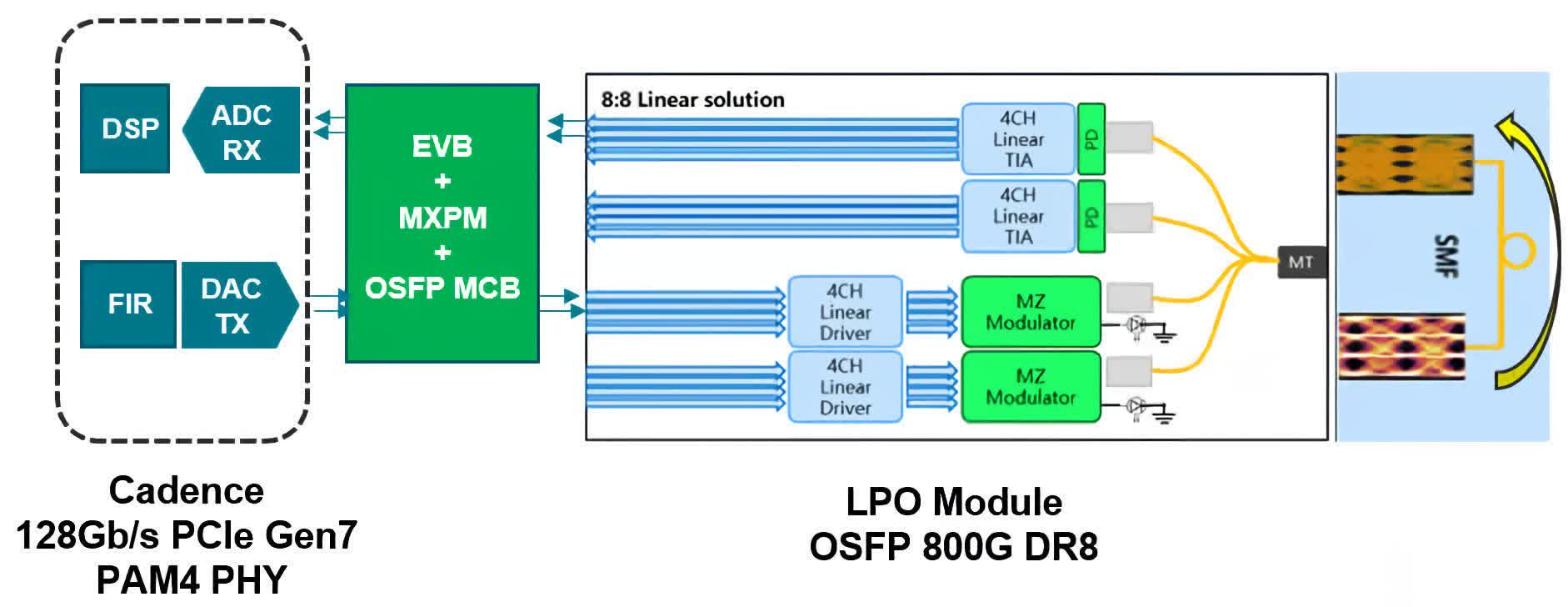

Cadence demonstrated a PCIe 7.0 connection reaching 128 gigatransfers per second (GT/s) using off-the-shelf parts last week at PCI-SIG DevCon 2024. The test marks a significant step forward for optical PCIe connections, the proposed successor to CopperLink.

The demo held the connection continuously for more than two days, the entire length of the convention, without interruption. Showing off PCIe 7.0's TX and RX capabilities, Cadence maintained a pre-FEC bit error rate (BER) of ~3E-8, leaving ample margin for Reed-Solomon forward error correction (RS FEC).
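To put the pre-FEC BER figure in perspective, the short Python sketch below estimates how many raw bit errors such a link would see before error correction. It assumes a single lane carrying one bit per transfer at 128 GT/s and treats ~3E-8 as a steady average; the article does not state the lane count or the error distribution, so the numbers are illustrative only.

```python
# Rough estimate of raw (pre-FEC) bit errors on a 128 GT/s link.
# Assumptions (not from the article): a single lane, one bit per transfer,
# and a constant average bit error rate.
LINE_RATE_BITS_PER_S = 128e9      # 128 GT/s treated as 128 Gb/s on one lane
PRE_FEC_BER = 3e-8                # pre-FEC BER reported for the demo
DEMO_SECONDS = 2 * 24 * 60 * 60   # two days, the approximate demo length


def expected_raw_errors(bit_rate, ber, seconds):
    """Expected number of bit errors before FEC over a time window."""
    return bit_rate * ber * seconds


errors_per_second = expected_raw_errors(LINE_RATE_BITS_PER_S, PRE_FEC_BER, 1)
errors_over_demo = expected_raw_errors(LINE_RATE_BITS_PER_S, PRE_FEC_BER, DEMO_SECONDS)

print(f"~{errors_per_second:,.0f} raw bit errors per second")
print(f"~{errors_over_demo:.2e} raw bit errors over two days")
```

Under these assumptions the link sees thousands of raw bit errors every second, which is why the figure is quoted before FEC: the error-correction stage is expected to clean them up.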

Optical PCIe connectors are intended for enterprise applications like hyperscale, cloud computing, HPC, and data centers. As an alternative to CopperLink, they could provide server and data center builders with more options for superior speed and bandwidth.

CopperLink specifications debuted in March and are designed to facilitate 32 or 64 GT/s connections for PCIe 5.0 and 6.0, respectively. Optical technology will facilitate PCIe 6.0 and 7.0, but the blistering 128 GT/s that Cadence demonstrated will only be possible with 7.0.

PCI-SIG established a team to explore optical connections back in August 2023, and a broad range of technologies is planned to support PCIe, including pluggable optical transceivers, on-board optics, co-packaged optics, and optical I/O. The final changes, which will enhance the PCIe electrical specification through an engineering change request (ECR), are planned for December 2024.

The most cutting-edge consumer PCs currently utilize PCIe 5.0, most notably to push SSD read speeds over 10 GB/s. The PCI-SIG released the full specifications for PCIe 6.0 early in 2022, and the standard might begin to emerge in enterprise hardware throughout 2024 and 2025.

Meanwhile, the PCIe 7.0 draft specification was updated to version 0.5 during DevCon last week, with the final specification expected to arrive next year. PCI-SIG initially expected real-world hardware to begin appearing in 2027 but has pushed its projection back to 2028.

The PCIe 6.0 and 7.0 specifications should support bidirectional bandwidth of up to 256 GB/s and 512 GB/s, respectively, in an x16 (16-lane) configuration. Their innovations also include Pulse Amplitude Modulation with four levels (PAM4), Lightweight Forward Error Correction (FEC), Cyclic Redundancy Check (CRC), and Flow Control Units (Flits). Cadence demonstrated Flits and other new PCIe 6.0 features at multiple DevCon booths.
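The headline bandwidth figures follow from the per-lane transfer rates with simple arithmetic. The Python sketch below assumes one bit per transfer per lane and that the quoted GB/s numbers count both directions; it ignores encoding, FEC, and Flit overhead, so it gives raw ceilings rather than achievable throughput. The function name is purely illustrative.

```python
# Raw bandwidth ceiling of a PCIe link from its per-lane transfer rate.
# Assumptions: one bit per transfer per lane, no protocol overhead,
# and "bidirectional" means the sum of both directions.

def raw_bandwidth_gbytes(gt_per_s, lanes=16):
    """Return (per-direction, bidirectional) raw bandwidth in GB/s."""
    per_direction = gt_per_s * lanes / 8   # 8 bits per byte
    return per_direction, 2 * per_direction


# Per-lane rates in GT/s as given in the article.
rates = {"PCIe 5.0": 32, "PCIe 6.0": 64, "PCIe 7.0": 128}

for generation, rate in rates.items():
    one_way, both_ways = raw_bandwidth_gbytes(rate)
    print(f"{generation} x16: {one_way:.0f} GB/s per direction, "
          f"{both_ways:.0f} GB/s bidirectional")
```

With these assumptions, 64 GT/s and 128 GT/s across 16 lanes work out to the 256 GB/s and 512 GB/s figures cited for PCIe 6.0 and 7.0.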

PCIe 7.0 achieves impressive 128 GT/s in optical connection demonstration