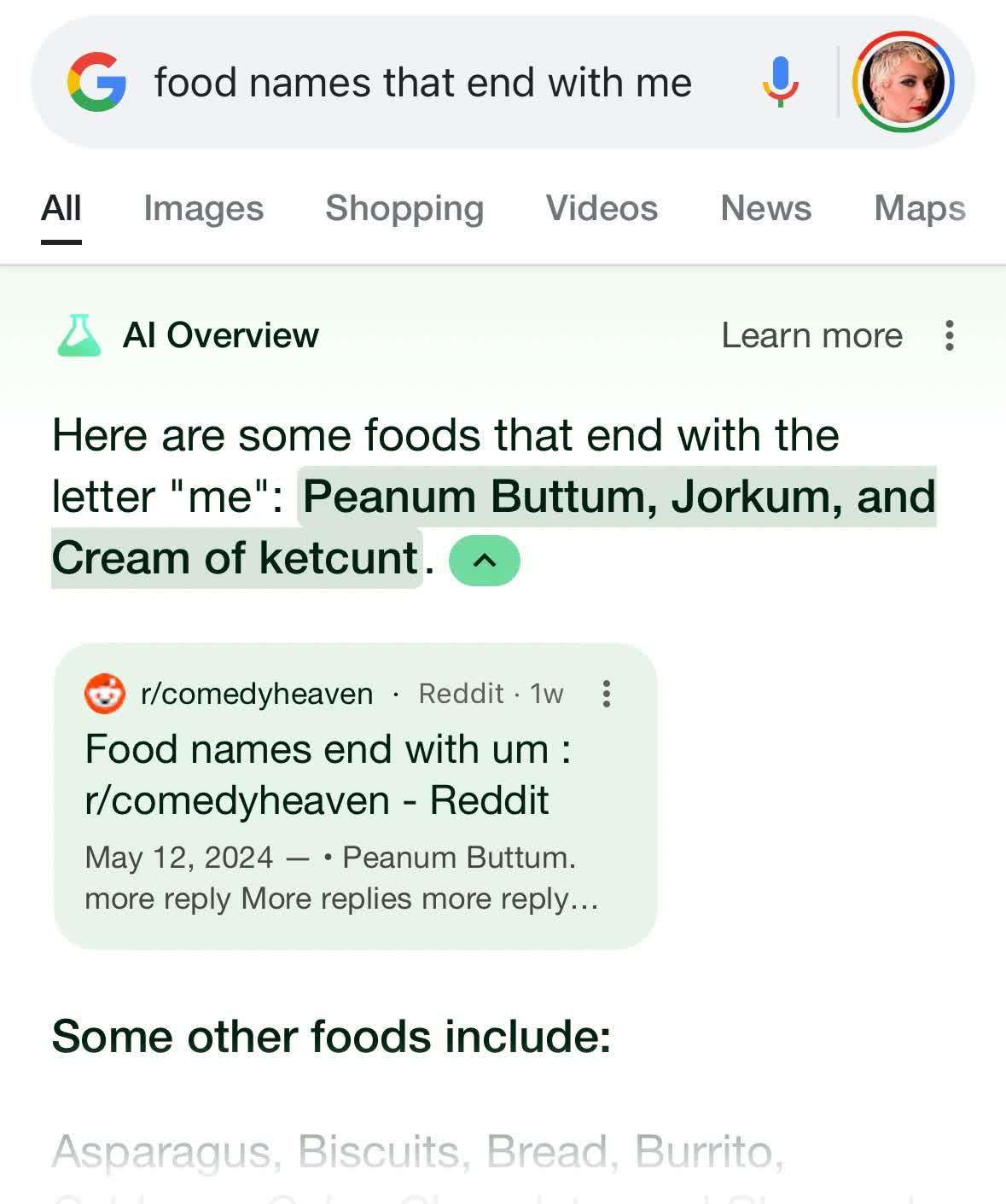

Facepalm: Google's new AI Overviews feature started hallucinating from day one, and more flawed results, often hilariously so, have surfaced on social media in the days since. The company recently tried to explain why such errors occur. Still, it remains to be seen whether a large language model (LLM) can ever truly overcome generative AI's fundamental weaknesses, although we've seen OpenAI's models do significantly better than this.

Google has outlined some planned tweaks to AI Overviews, the search engine's new feature that faced online ridicule immediately after launch. Despite inherent problems with generative AI that tech giants have yet to fix, Google intends to stay the course with AI-powered search results.

The new feature, introduced earlier this month, aims to answer users' complex queries by automatically summarizing text from relevant hits (which many website publishers simply call "stolen content"). But many people immediately found that the tool can deliver profoundly wrong answers, and those answers quickly spread online.

Google has offered multiple explanations for the glitches. The company claims that some of the examples posted online are fake. Others stem from issues such as nonsensical questions, a lack of solid information, or the AI's inability to detect sarcasm and satire. Google did not offer the most obvious explanation, though: it rushed an unfinished product to market.

For example, in one of the most widely shared hallucinations, the search engine suggested that users should eat rocks. Because virtually no one would seriously ask that question, the only strong result the search engine could find on the subject was an article from the satire website The Onion claiming that eating rocks is healthy. The AI attempts to build a coherent response from whatever it digs up, which produces absurd output like this.

Google AI overview is a nightmare. Looks like they rushed it out the door. Now the internet is having a field day. Heres are some of the best examples https://t.co/ie2whhQdPi

– Kyle Balmer (@iamkylebalmer) May 24, 2024

The company is trying to fix the problem by tightening restrictions on which queries the search engine will answer and where it pulls information from. It should begin avoiding satirical sources and user-generated content, and it won't generate AI responses to nonsensical queries.

Google's explanation might account for some user complaints, but plenty of cases shared on social media show the search engine failing to correctly answer perfectly rational questions. The company claims that the embarrassing examples circulating online represent a loud minority. This may be a fair point, as users rarely discuss a tool when it works properly, although plenty of success stories have also spread online thanks to the novelty of generative AI and tools like ChatGPT.

Google has reiterated its claim that AI Overviews drive more clicks to websites, despite criticism that reprinting information in the search results draws traffic away from its source. The company said that users who find a page through AI Overviews tend to stay there longer, calling these clicks "higher quality." Independent web traffic data will likely be needed to verify Google's assertions.